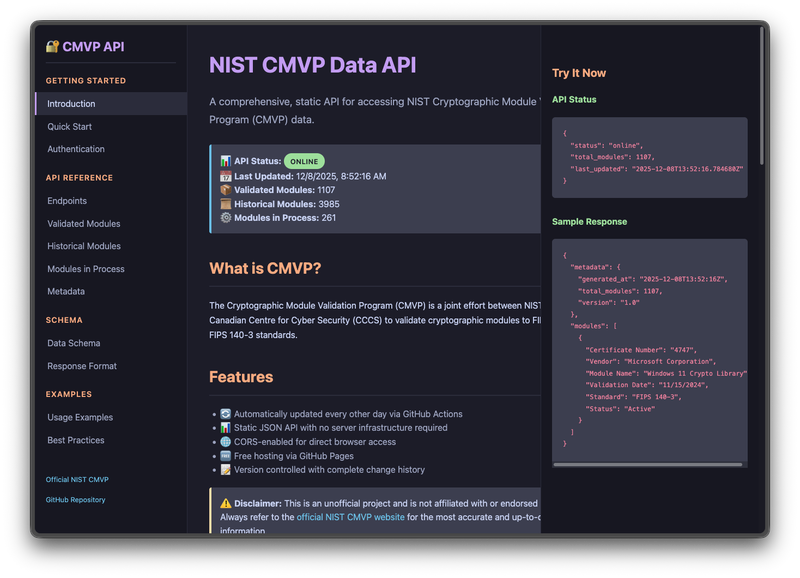

What It Does#

Scrapes NIST’s CMVP database and serves it as a static JSON API via GitHub Pages. Data refreshes weekly via GitHub Actions.

How It Works#

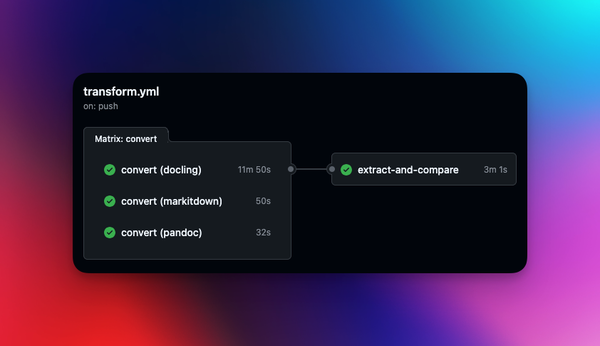

The pipeline runs automatically via GitHub Actions:

flowchart TD

subgraph Trigger

A[Weekly Schedule] --> D

B[Manual Dispatch] --> D

C[Code Push] --> D

end

D[GitHub Actions Runner] --> E[Setup Python + Playwright]

E --> F[Initialize crawl4ai]

F --> G[Run scraper.py]

subgraph Scrape["Scrape NIST CMVP Site"]

G --> H[Active Modules]

G --> I[Historical Modules]

G --> J[In-Process Modules]

G --> K[Algorithm Details]

end

subgraph Generate["Generate JSON Files"]

H --> L[modules.json]

I --> M[historical-modules.json]

J --> N[modules-in-process.json]

K --> O[algorithms.json]

L & M & N & O --> P[metadata.json]

P --> Q[index.json]

end

Q --> R{Changes?}

R -->|Yes| S[Git Commit & Push]

R -->|No| T[Skip]

S --> U[GitHub Pages Deploy]

U --> V[Static API Live]

The scraper uses crawl4ai, an AI-friendly web crawling library built on Playwright. Playwright handles the browser automation needed to navigate NIST’s JavaScript-heavy pages, while crawl4ai provides clean extraction of the module data.

GitHub Actions runs the scraper on a weekly schedule, commits any changes to the repo, and triggers a GitHub Pages deployment. The result is a zero-infrastructure static API that stays current without any servers to maintain.

Data is sourced from NIST’s official CMVP database.

Why#

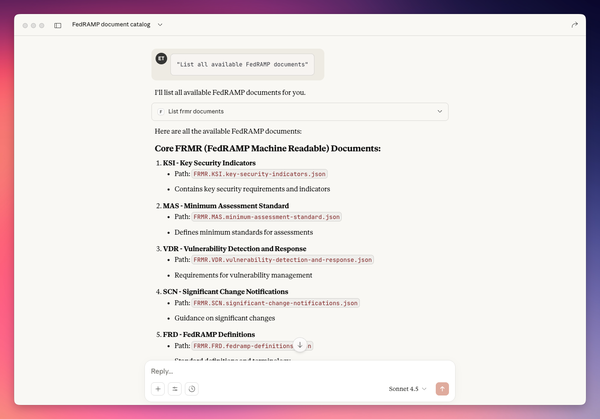

NIST’s CMVP site is clunky to query programmatically. This gives you clean JSON endpoints for:

- Currently validated cryptographic modules

- Historical/expired modules

- Modules in the validation process

Use Cases#

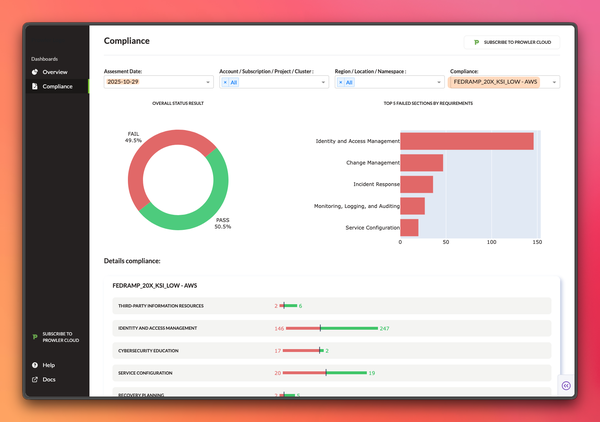

- FedRAMP/FIPS compliance checks

- Vendor crypto module lookups

- Building tools on top of CMVP data (like the NIST CMVP CLI)

Disclaimer#

Unofficial project - not affiliated with NIST. Verify critical info against the official source.